
Introduction

Fast-growing mid-market healthcare organizations are under unprecedented pressure to “do something with AI,” yet they lack impartial assessment, internal expertise, and clear ROI. This urgency is driving decision-makers to seek flexible, healthcare-informed external AI partners over generic SaaS or risky DIY paths.

Why MSOs and TPAs Are Feeling the AI Squeeze

- 📌 Investor & payor pressure. Boards and payors want to see an AI roadmap now. Gartner projects that by 2028 at least 15% of day-to-day work decisions will be made autonomously by agentic AI, pushing leadership teams to act fast.

- 💸 Rising SaaS “AI uplift” fees. EHR and HRIS vendors are charging 30–50% more for built-in AI features—often without transparency, HIPAA assurance, or real customization.

- 🔒 Black-box AI risk. MSO and TPA teams can’t afford decisions made by models they can’t audit. Most SaaS AI lacks explainability, PHI safeguards, or integration flexibility.

- ⚠️ Stalled in-house pilots. Many internal AI efforts fail due to staffing, unclear ROI, or infrastructure gaps. Mid-market organizations aren’t resourced to build AI alone.

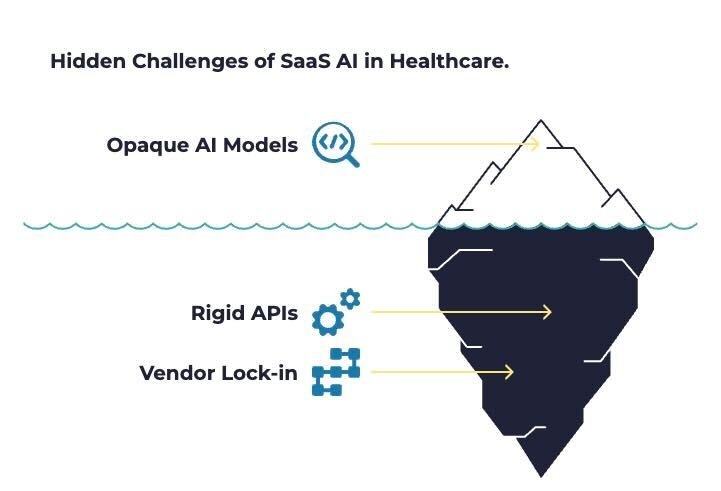

Why Black Box AI and SaaS Uplift Fees Hurt AI Strategy

| Issue | Impact |
|---|---|
| Opaque models | SaaS vendors often rely on undisclosed AI models; you can’t audit decisions tied to PHI, workflows, or risk assessment. |
| Rigid APIs | Plug-and-play integrations don’t adapt to unique healthcare coding, behavior metrics, or payer workflows. |
| Vendor lock-in | Switching models (even GPT‑4 to Claude) is a monumental effort without a partner that abstracts the complexity. |

Choosing the Right AI Partner

- 👁️ Human-in-the-loop Design: Keeping operations leads involved until the model delivers real value—not after.

- ⚙️ Model-Agnostic Architecture: Swappable LLMs and private vector DBs ensure you’re never locked into a single vendor and that a change to an underlying API never threatens your uptime.

- 🔐 Guard-Rails & Explainability: Build from day one with audit logs, PHI redaction, compliance hooks, and traceability, all essential for HIPAA and future CMS oversight.

- 📊 ROI‑Driven Sprints: Short sprints, clear metrics for staffing uplift, reimbursement gains, or efficiency—and kill-switch checkpoints if ROI doesn’t land.
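To make the model-agnostic and guard-rail points above more concrete, here is a minimal Python sketch, not a production design. Everything in it is a hypothetical illustration: the `GuardedClient` class, the `vendor_a` and `vendor_b` stand-in backends, and the two regex patterns, which are far too simple for real PHI detection. It shows one way a thin wrapper could redact text before it reaches any model, log every call for audit, and swap vendors without touching calling code.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List

# Hypothetical patterns for illustration only; real PHI detection needs a vetted tool.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact_phi(text: str) -> str:
    """Replace each matched pattern with a bracketed label such as [SSN]."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# A backend is any function that maps a prompt to a completion.
ModelBackend = Callable[[str], str]


@dataclass
class GuardedClient:
    backends: Dict[str, ModelBackend]
    active: str
    audit_log: List[dict] = field(default_factory=list)

    def swap(self, name: str) -> None:
        """Switch the active model; callers of complete() are unaffected."""
        if name not in self.backends:
            raise KeyError(f"unknown backend: {name}")
        self.active = name

    def complete(self, prompt: str) -> str:
        safe_prompt = redact_phi(prompt)  # redact before the model sees anything
        output = self.backends[self.active](safe_prompt)
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "model": self.active,
            "prompt": safe_prompt,  # only the redacted prompt is stored
            "output": output,
        })
        return output


# Stand-in backends; a real system would call a vendor SDK here.
def vendor_a(prompt: str) -> str:
    return f"A:{prompt}"


def vendor_b(prompt: str) -> str:
    return f"B:{prompt}"


client = GuardedClient(backends={"a": vendor_a, "b": vendor_b}, active="a")
first = client.complete("Member SSN 123-45-6789, callback 555-123-4567")
client.swap("b")
second = client.complete("Summarize claim status")
```

Because redaction and logging live in the wrapper rather than in any one vendor integration, the same controls apply no matter which model is active.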

Build vs. Buy vs. Partner: ROI of Custom AI Development in Healthcare

| Strategy | Pros | Cons |
|---|---|---|
| In-house build | Control everything | Requires deep bench: AI engineers, prompt specialists, compliance leads, UI/UX, DevOps, ongoing cost |
| Generic SaaS | Quick deployment | Lack of data ownership, auditability, customization, and portability |
| External partner (e.g., Serious Development) | Domain‑aligned teams, agile & compliant, model‑agnostic, ROI‑focused | Still requires internal support, but lower cost/risk ratio |

“Vendors are coming in saying, ‘I’ve got what you need,’” said John-David Lovelock, Gartner analyst.

But as Gartner warns, many AI solutions marketed to healthcare providers are more sizzle than substance. These platforms often underdeliver because they lack customization, clinical nuance, or alignment with HIPAA and audit expectations. That’s why growth-stage MSOs and ABA networks are increasingly looking beyond flashy SaaS add-ons—and instead choosing external partners who can build around their unique workflows, data, and regulatory context.

Leading by Example: “Re‑Engineering SaaS AI” Whitepaper Insight

Serious Development’s white‑paper outlines a 3‑phase “Opaque → Customizable → Agentic” maturity path—starting with existing vendor tools, shifting to customizable control layers, adding human validation, and ending with autonomous agents under strict governance.

Key Takeaways for Executives

Mid‑market MSO, TPA and MAPD health plan providers are in an AI squeeze: pressured to adopt, risk-averse about unknown tools, and lacking internal capabilities. The solution? A healthcare-fluent external AI partner that balances control, compliance, ROI—and delivers faster.

Frequently Asked Questions (FAQ)

What are my first steps to evaluate healthcare AI?

Start with a risk‑value matrix. Identify low‑risk, high‑impact pilots (e.g. admin automation). Measure speed, cost, ROI, and scalability before scaling.
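As a rough illustration of the risk‑value matrix described above, the Python sketch below scores candidate pilots and sorts them into quadrants. The 1-to-5 scales, the threshold of 3, the quadrant labels, and every candidate except admin automation are assumptions made up for the example, not a prescribed methodology.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pilot:
    name: str
    risk: int    # 1 = low, 5 = high (hypothetical scale)
    impact: int  # 1 = low, 5 = high (hypothetical scale)


def quadrant(pilot: Pilot, threshold: int = 3) -> str:
    """Place a pilot in one of four quadrants of the matrix."""
    low_risk = pilot.risk < threshold
    high_impact = pilot.impact >= threshold
    if low_risk and high_impact:
        return "pilot first"
    if high_impact:
        return "plan with safeguards"
    if low_risk:
        return "low priority"
    return "avoid"


def rank(pilots: List[Pilot]) -> List[Pilot]:
    """Order pilots by impact minus risk, best candidates first."""
    return sorted(pilots, key=lambda p: p.impact - p.risk, reverse=True)


# Hypothetical scores for illustration only.
candidates = [
    Pilot("Clinical decision support", risk=5, impact=5),
    Pilot("Admin automation", risk=1, impact=4),
    Pilot("Internal FAQ bot", risk=1, impact=2),
]

ranked = rank(candidates)
for pilot in ranked:
    print(f"{pilot.name}: {quadrant(pilot)}")
```

With these made-up scores, admin automation lands in the "pilot first" quadrant, which matches the low‑risk, high‑impact starting point suggested above.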

How expensive are vendor AI “uplift” fees?

You’re often looking at 30–50% annual increases with no audit rights or ROI guarantees—locking in spend with opaque benefits.

Are in-house AI pilots worth it?

Piloting with a small group of internal experts and then expanding outward is a great way to ensure success and reduce risk.

What’s human‑in‑the‑loop AI?

It is an approach in which users review and approve AI outputs during early rollout, which builds confidence, captures edge cases, and helps refine the models.
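One way to picture that approval step is a simple review queue. The Python sketch below is a hypothetical illustration: the `Suggestion` and `ReviewQueue` names, the item IDs, and the reviewer note are all invented, and a real rollout would add reviewer identity, timestamps, and persistent storage. Nothing the model produces is released until a person approves it, and rejections are kept as edge cases for later refinement.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Suggestion:
    item_id: str
    ai_output: str


@dataclass
class ReviewQueue:
    pending: List[Suggestion] = field(default_factory=list)
    released: List[Suggestion] = field(default_factory=list)
    edge_cases: List[Tuple[Suggestion, str]] = field(default_factory=list)

    def submit(self, suggestion: Suggestion) -> None:
        """AI output always enters the queue; it is never released directly."""
        self.pending.append(suggestion)

    def review(self, item_id: str, approve: bool, note: str = "") -> None:
        """A human reviewer approves or rejects one pending suggestion."""
        for index, suggestion in enumerate(self.pending):
            if suggestion.item_id == item_id:
                self.pending.pop(index)
                break
        else:
            raise KeyError(f"no pending suggestion with id {item_id}")
        if approve:
            self.released.append(suggestion)
        else:
            # Rejections are kept with the reviewer's note for model refinement.
            self.edge_cases.append((suggestion, note))

    def approval_rate(self) -> float:
        """Share of reviewed suggestions that were approved."""
        reviewed = len(self.released) + len(self.edge_cases)
        return len(self.released) / reviewed if reviewed else 0.0


queue = ReviewQueue()
queue.submit(Suggestion("item-1", "draft summary A"))
queue.submit(Suggestion("item-2", "draft summary B"))
queue.review("item-1", approve=True)
queue.review("item-2", approve=False, note="missed a payer-specific rule")
```

Tracking the approval rate over time gives a simple signal for when the review step could be relaxed for a given workflow.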

Can I trust AI with PHI?

Only when it's built with HIPAA-compliant redaction, traceability, and governance, ideally via vendor-neutral systems with guard-rail layers from day one. FUTURE‑AI guidelines recommend explainability, traceability, and robustness.