Introduction

Healthcare leaders are under pressure to “do AI now.” But bolting AI onto broken workflows rarely delivers value. The smarter play is fixing the foundation — connecting siloed systems, simplifying processes, and making data useful — then layering in AI strategically.

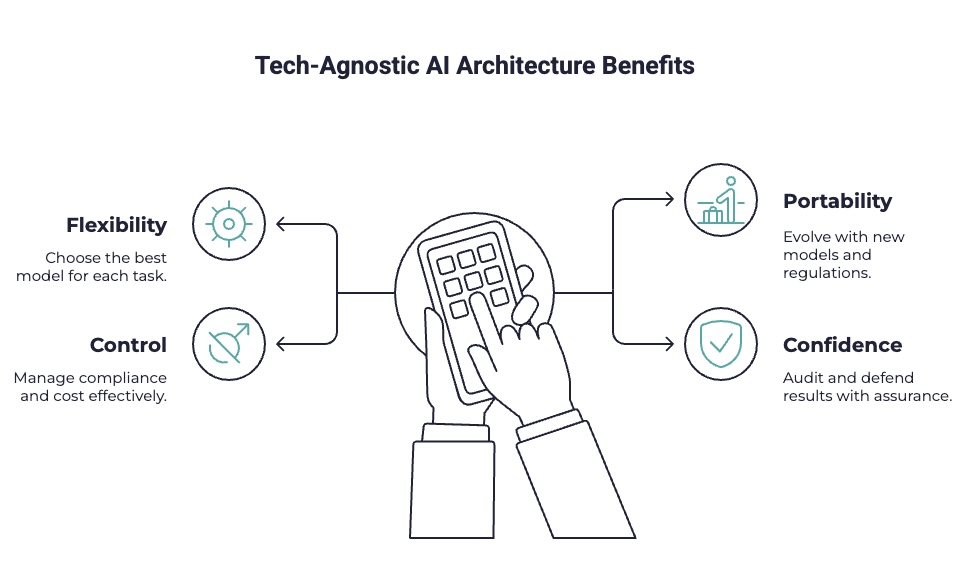

That’s where tech-agnostic AI comes in. By designing an architecture that keeps your data in your control, avoids vendor lock-in, and makes AI outputs explainable, you can adopt today’s models (Azure OpenAI, Gemini, Claude) and be ready for tomorrow’s — without costly rebuilds.

Why Portability Matters for Healthcare Leaders

- Avoid vendor lock-in: Swapping models should take hours, not months.

- Protect compliance: Keep PHI and rules in your environment, not vendor clouds.

- Stay cost-efficient: As model costs drop, you can move to cheaper, better options.

- Enable scale: Add AI where it helps most — member portals, intake, claims triage — while keeping workflows stable.

Anatomy of a Flexible AI Layer

- Connected Systems

Portals, EHRs, Salesforce, EZ-Cap — integrated so data flows instead of stalling in spreadsheets.

Exec takeaway: Without connected systems, it’s just a new coat of paint on a crumbling foundation.

- Private Vector Databases

Store your payer rules, SOPs, and clinical manuals as embeddings in your environment. AI then grounds outputs in your playbook.

Exec takeaway: Your AI should align with your policies, not the internet’s.

- Model Control Panels (MCPs)

Think of this as the AI “switchboard.” It routes each task to the best-fit model: for example, GPT-4o for one task and Claude for another.

Exec takeaway: MCPs keep you model-neutral and future-proof.

- Explainable AI (XAI)

Compliance officers, boards, and CMS don’t just want results — they want reasons. Explainability layers make AI audit-ready.

Exec takeaway: If you can’t explain it, you can’t defend it.
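To make the "switchboard" idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the architecture of any specific product: the `ModelRouter` class, the task name `claims_triage`, and the two stub backends are all hypothetical, and a real deployment would wrap vendor SDK calls behind the same callable interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Assumption: any model backend can be wrapped as "prompt in, text out".
ModelBackend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical switchboard: workflows name a task, never a vendor."""
    backends: Dict[str, ModelBackend] = field(default_factory=dict)
    routes: Dict[str, str] = field(default_factory=dict)  # task -> backend name
    audit_log: List[dict] = field(default_factory=list)

    def register(self, name: str, backend: ModelBackend) -> None:
        self.backends[name] = backend

    def route(self, task: str, backend_name: str) -> None:
        # Swapping models is a one-line routing change, not a rebuild.
        if backend_name not in self.backends:
            raise KeyError(f"unknown backend: {backend_name}")
        self.routes[task] = backend_name

    def run(self, task: str, prompt: str) -> str:
        backend_name = self.routes[task]
        output = self.backends[backend_name](prompt)
        # Record which model handled which task so the decision is reviewable.
        self.audit_log.append({"task": task, "backend": backend_name})
        return output


# Stand-in backends; real ones would call a vendor API.
def stub_model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def stub_model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


router = ModelRouter()
router.register("model-a", stub_model_a)
router.register("model-b", stub_model_b)

router.route("claims_triage", "model-a")
first = router.run("claims_triage", "Summarize this claim")

router.route("claims_triage", "model-b")  # the swap
second = router.run("claims_triage", "Summarize this claim")
```

The calling workflow never changes between the two runs; only the routing entry does. The audit log is a simplified stand-in for an explainability layer.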

Case Study: AI-Powered SMS and Email Reminder Platform

The Challenge

Inefficient Manual Processes: Staff relied on manual phone calls, which consumed significant time and resources.

High No-Show Rates: Missed appointments led to inefficiencies in scheduling and revenue loss.

Patient Engagement: Limited communication channels resulted in lower patient satisfaction.

The Solution

Serious Development implemented a customized SMS and Email Reminder App, integrated with CentralReach and powered by AI + RPA. The system automated nearly every reminder workflow, enabling two-way communication, HIPAA-compliant data handling, and empathetic AI-driven responses.

Key Highlights:

- 📲 Automated reminders via SMS, email, and voice

- 🔁 Two-way communication for confirmations and reschedules

- 🤖 RPA integration feeding updates directly into CentralReach

- 🧠 AI-based message handling for non-standard replies (“My child is sick…”)

- 📊 Enhanced reporting to monitor cancellation trends
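The two-way reply handling in the highlights above can be pictured roughly as follows. This Python sketch is an assumption about how such a flow might be structured, not the system described in the case study: the keyword sets, the `classify_reply` and `handle_reply` functions, and the `ai_handler` callback are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical keyword sets for standard replies.
CONFIRM = {"c", "confirm", "yes", "y"}
RESCHEDULE = {"r", "reschedule"}
CANCEL = {"cancel", "no", "n"}


def classify_reply(text: str) -> str:
    """Return a standard intent, or 'needs_ai' for free-text replies."""
    # Lowercase and drop punctuation so "YES!" matches "yes".
    normalized = re.sub(r"[^a-z ]", "", text.strip().lower()).strip()
    if normalized in CONFIRM:
        return "confirm"
    if normalized in RESCHEDULE:
        return "reschedule"
    if normalized in CANCEL:
        return "cancel"
    return "needs_ai"


def handle_reply(text: str, ai_handler: Callable[[str], str]) -> str:
    """Rules handle standard replies; everything else goes to the AI handler."""
    intent = classify_reply(text)
    if intent == "needs_ai":
        return ai_handler(text)
    return intent
```

Under this design, the model only sees messages that simple rules cannot resolve, such as “My child is sick…”, which keeps routine confirmations fast and predictable.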

Implementation:

Deployed across multiple sites in just 8 weeks, with staff training and in-app tutorials for smooth adoption.

Results:

✅ 25% drop in cancellations within 3 months

⏱ 90% reduction in scheduler time (hours to minutes)

💬 Higher client satisfaction (+25% positive feedback)

📈 Better utilization of authorized hours through automated rescheduling

The Outcome

This solution transformed appointment management from a manual, error-prone process into a fully automated, scalable system—boosting efficiency, compliance, and client experience across the network.

ROI Checklist for Healthcare Leaders

| Action | Benefit |
|---|---|
| Swap per-seat SaaS for usage-based model pricing | 25–50% AI cost savings |
| Ground outputs in a private vector database | Compliance and data control |
| Enable model swaps without rebuilding | Avoid six-figure transition costs |
| Automate reporting/intake with AI where it fits | Faster cycle times, lower admin burden |

Executive Summary

AI isn’t optional — but how you adopt it is. The question is whether you’ll let vendors dictate the terms, or whether you’ll control the models, data, and workflows.

A tech-agnostic AI architecture, built on connected systems, private vector DBs, model control panels, and explainability layers, gives you confidence in AI decisions you can defend.

For ABAs, MAPD health plans, MSOs, and TPAs, the lesson is clear: fix the foundation, then apply AI strategically. Leaders who invest in this now won’t just save money — they’ll preserve agility, compliance, and trust for the long haul.

Ready to move from pilot to proven?

Frequently Asked Questions (FAQ)

How do you switch AI models without retraining?

Use a Model Control Panel that decouples workflows from any specific model, so a swap becomes a routing change rather than a rebuild.

What’s a private vector database, and why does it matter?

It stores your organization’s SOPs, manuals, and payer rules as embeddings inside your own environment, so AI can reference them securely without leaking data externally.
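As a rough illustration of how grounding against stored embeddings works, the Python sketch below ranks policy snippets by cosine similarity and builds a prompt from the best match. Everything in it is a stand-in: the three policy sentences, their hand-made three-number vectors, and the function names are hypothetical, and a real system would use an embedding model and a vector database rather than an in-memory list.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical policy snippets with toy, hand-made embeddings.
POLICY_INDEX: List[Tuple[str, List[float]]] = [
    ("Prior authorization is required for out-of-network referrals.", [0.9, 0.1, 0.0]),
    ("Appointment reminders go out 48 hours before the visit.", [0.1, 0.9, 0.1]),
    ("Claims must be submitted within 90 days of service.", [0.0, 0.2, 0.9]),
]


def retrieve(query_vector: Sequence[float], top_k: int = 1) -> List[str]:
    """Return the top_k policy snippets most similar to the query vector."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in POLICY_INDEX]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_k]]


def build_grounded_prompt(question: str, query_vector: Sequence[float]) -> str:
    """Put retrieved policy text in front of the question before calling a model."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query_vector))
    return f"Answer using only the policy excerpts below.\n{context}\nQuestion: {question}"


prompt = build_grounded_prompt("What is the claims filing deadline?", [0.0, 0.1, 1.0])
```

Because the index lives in your environment and only the retrieved excerpt is passed to the model, the same prompt can be sent to whichever model is currently in use.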

Why is explainable AI essential in healthcare?

It creates transparency—critical for audits, regulatory review, and building clinician trust.

What’s the risk of vendor lock-in?

Loss of financial agility, compliance control, and innovation opportunities.

Can multiple AI models be used simultaneously?

Yes—MCPs orchestrate best-fit models per task dynamically.
